from andi_datasets.datasets_challenge import challenge_theory_dataset1. Explore the models

How to use this notebook:

If you are interested in quick overview on how to create datasets for the ANDI challenge, please go to Section 1. For further details on the creation of datasets of theoretical trajectories, please go to Section 2. To learn how to access the various theoretical diffusion models contained in the andi_datasets, please go to Section 3.

Creating the ANDI 1 challenge dataset

Before starting, make sure that you know the details of the competition. You can learn about every in the associated webpage. A quick overview: there are three tasks: 1) inference of the anomalous diffusion exponent, 2) classification of the diffusion model and 3) segmentation of trajectories. For each task, there are three subtasks, one per dimension. To create a dataset for every task and dimension, each e.g. of \(N=10\) trajectories, you just need to run

X1, Y1, X2, Y2, X3, Y3 = challenge_theory_dataset(N = 10)Creating a dataset for task(s) [1, 2, 3] and dimension(s) [1, 2, 3].

Generating dataset for dimension 1.

Generating dataset for dimension 2.

Generating dataset for dimension 3.X1, X2 and X3 correspond to the trajectories of Task 1, 2 and 3, respectively, while Y1, Y2 and Y_3 correspond to the labels. Each of these list contains three list, each one containing the trajectories for each dimension, in ascending order. For example, X2[2] contains the trajectories for Task 2 for dimension 3. If you only want to generate trajectories for a specific Task and dimensions, you can specify it as

X1, Y1, X2, Y2, X3, Y3 = challenge_theory_dataset(N = 10, tasks = 1, dimensions = 3)Creating a dataset for task(s) 1 and dimension(s) 3.

Generating dataset for dimension 3.In this case all the lists but the ones specified will be empty. For example:

print('Task 3, dimension 1 is empty: len(X3[0]) = '+ str(len(X3[0])))

print('But Task 1, dimension 3 has our 10 trajectories: len(X1[2]) = '+str(len(X1[2])))Task 3, dimension 1 is empty: len(X3[0]) = 0

But Task 1, dimension 3 has our 10 trajectories: len(X1[2]) = 10To avoiding having to create a dataset every time we want to use it, you can use the option save_dataset, which will save a file for every dataset of trajectories (named task1.txt, task2.txt and task3.txt) and their corresponding labels (named ref1.txt, ref2.txt and ref3.txt). The first column of each file corresponds to the dimension of the trajectory.

X1, Y1, X2, Y2, X3, Y3 = challenge_theory_dataset(N = 10, save_dataset = True)Creating a dataset for task(s) [1, 2, 3] and dimension(s) [1, 2, 3].

Generating dataset for dimension 1.

Generating dataset for dimension 2.

Generating dataset for dimension 3.Now that we saved the dataset, we can load it with the option load_dataset:

X1, Y1, X2, Y2, X3, Y3 = challenge_theory_dataset(N = 10, load_dataset = True)Creating a dataset for task(s) [1, 2, 3] and dimension(s) [1, 2, 3].Take into account that if you saved \(N=10\) trajectories, you will only be able to load this 10, even if you ask for \(N=20\).

By default, the trajectories in this dataset will have a maximum length of 1000 steps and a minimum length of 10. To change that, you can use the options max_T and min_T. Take into account that you will need trajectories of 200 steps for task 3. Shorter trajectories will raise an error.

X1, Y1, X2, Y2, X3, Y3 = challenge_theory_dataset(N = 10, min_T = 15, max_T = 200)Creating a dataset for task(s) [1, 2, 3] and dimension(s) [1, 2, 3].

Generating dataset for dimension 1.

Generating dataset for dimension 2.

Generating dataset for dimension 3.The rests of the optional inputs of the function (load_trajectories, save_trajectories, N_save and T_save) refer to the saving/loading of the trajectories used to generate the datasets, in numpy format. See Section 2 for more details on this.

Creating trajectories from theoretical models

For this, we will the class andi_datasets.datasets_theory class. First, lets import some auxiliary packages:

import numpy as np

from matplotlib import pyplot as pltFirst, let’s define the class. One thing we can do is to access the available diffusion models:

from andi_datasets.datasets_theory import datasets_theory

AD = datasets_theory()

AD.avail_models_name['attm', 'ctrw', 'fbm', 'lw', 'sbm']The label asigned to each model is given by its position in the previous list. Now, we will generate a dataset containing \(N=2\) trajectories per model and exponent. We will consider anomalous exponents \(\alpha = [0.7, 0.9]\) and lenghts \(T=5\) from the models attm and fbm.

dataset = AD.create_dataset(T = 4, N_models = 2, exponents = [0.7, 0.9], models = [0, 2])

print(np.round(dataset,2))[[ 0. 0.7 0. 0.4 -0.15 0.04]

[ 0. 0.7 0. -0.49 -1.02 -0.87]

[ 0. 0.9 0. 0.04 0.85 0.64]

[ 0. 0.9 0. 1.26 2.33 2.38]

[ 2. 0.7 0. 0.08 2.09 1.03]

[ 2. 0.7 0. 0.18 0.61 1.12]

[ 2. 0.9 0. 0.12 1.12 0.33]

[ 2. 0.9 0. 0.08 -0.59 -0.45]]Each row corresponds to a trajectory. The first column is the model label, while the second column labels the exponent. The rest of the row is the trajectory. The default dimension for the trajectories is one. We will see later how to create trajectories with more dimensions.

To create datasets of trajectories with higher dimensions, you can use the input dimensions. The trajectories will be outputed as a single row of the dataset matrix. This means that if you create a dataset of trajectories of length \(T = 10\) and dimensions 2, the number of columns of the dataset will be 2 (the labels) + \(2\cdot 10\) (the trajectory). Here is an example:

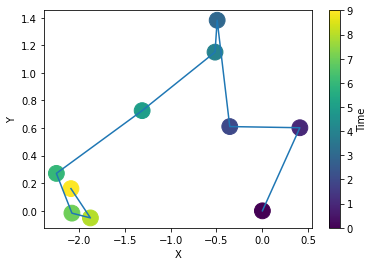

dataset = AD.create_dataset(T = 10, N_models = 1, exponents = [0.7], models = [2], dimension = 2)

print(np.round(dataset[0], 2))[ 2. 0.7 0. -1.14 -1.17 -1.36 -1.62 -0.88 -0.85 -1.05 -1.23 -0.57

0. -0.37 -0.33 -0.71 -0.25 0.39 0.7 0.05 -0.18 -0.24]plt.plot(dataset[0,2:12], dataset[0, 12:], '-')

plt.scatter(dataset[0,2:12], dataset[0,12:], c=np.arange(10), s = 250)

plt.colorbar().set_label('Time'); plt.xlabel('X'); plt.ylabel('Y');

Saving and loading datasets

datasets_theory allows also to save and load datasets, avoiding the need of generate new trajectories each time we want to create a dataset. There exist two optional inputs to the function create_datasets: save_trajectories and load_trajectories, which if True, save and load datasets, respectively. A dataset for each exponent and model considered are saved in a .h5py file whose name is the model considered. Each file contains datasets for each exponent.

In these cases, two important variables take also into play, T_save and N_save. These set the lenght and number of trajectories to save for each exponent and model. They are set as default to T_save\(=10^3\) and N_save\(=10^4\) as this allows to create any other combinantion of dataset. See that in the ANDI challenge we will never consider trajectories longer than \(T=10^3\).

The name of the datasets inside the .h5py file is then (exponent)_(T_save)_(N_save).

Using the default values for this two variables, let’s save datasets for the previous examples:

dataset = AD.create_dataset(T = 4, N_models = 2, exponents = [0.7, 0.9], models = [0, 2],

save_trajectories = True, path = 'datasets/')

print(np.round(dataset,2))100%|█████████████████████████████████████████| 1000/1000 [00:02<00:00, 365.01it/s, exponent=0.7, model=attm, saving=1]

100%|█████████████████████████████████████████| 1000/1000 [00:06<00:00, 156.58it/s, exponent=0.9, model=attm, saving=1]

100%|█████████████████████████████████████████| 1000/1000 [00:00<00:00, 2349.36it/s, exponent=0.7, model=fbm, saving=1]

100%|█████████████████████████████████████████| 1000/1000 [00:00<00:00, 2394.90it/s, exponent=0.9, model=fbm, saving=1][[ 0. 0.7 0. -0.16 -1.69 -0.53]

[ 0. 0.7 0. -0.45 -0.9 -0.39]

[ 0. 0.9 0. 0.08 0.69 0.15]

[ 0. 0.9 0. -1.65 0.29 2.47]

[ 2. 0.7 0. -0.02 0.11 0.15]

[ 2. 0.7 0. 0.01 0.02 0.05]

[ 2. 0.9 0. 0. -0.04 -0.06]

[ 2. 0.9 0. -0.03 0.05 0.03]]If the datasets were already saved, we can load them instead:

dataset = AD.create_dataset(T = 4, N_models = 2, exponents = [0.7, 0.9], models = [0, 2],

load_trajectories = True, path = 'datasets/')

print(np.round(dataset,2))[[ 0. 0.7 0. 1.02 0.9 0.64]

[ 0. 0.7 0. -0.03 0.07 -0.96]

[ 0. 0.9 0. -0.68 0.31 1.78]

[ 0. 0.9 0. -0.45 1.02 2.24]

[ 2. 0.7 0. -0.02 -0.05 0.06]

[ 2. 0.7 0. -0.05 -0.03 -0.02]

[ 2. 0.9 0. -0.03 0.06 0.07]

[ 2. 0.9 0. 0.03 0.02 0.02]]Creating noisy datasets

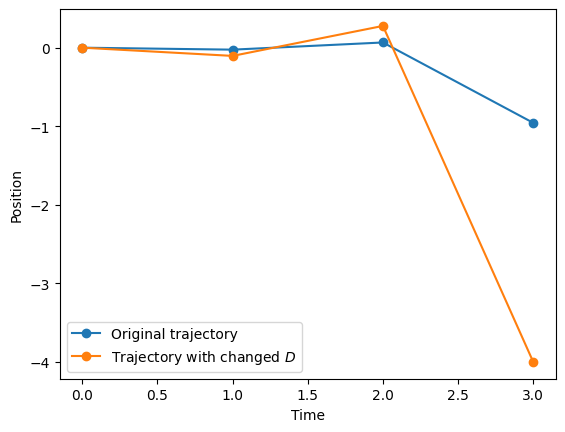

There are two ways of adding noise to the trajectories: either by varying their diffusion coefficient or by adding noise to each of the trahectory’s points. You can do it with the functions create_noisy_diffusion_dataset or create_noisy_localization_dataset, respectively. These functions allow to either create a new dataset to which we will add the noise or give as input the dataset. We will use now the dataset we created in previous cells.

Noisy diffusion coefficients The function create_noisy_diffusion_dataset changes the diffusion coefficient of a given trajectory. This function works similarly to create_dataset, but has as optional input diffusion_coefficients. You can use this variable to change the diffusion coefficient of the input trajectories at your please. If you don’t give an input to it, they will be drawn randomly from a Gaussian distribution with variance 1. See that, as \(D\) may be negative, the trajectories can be reversed.

dataset_noise_D = AD.create_noisy_diffusion_dataset(dataset.copy(), T = 4)

plt.plot(dataset[1,2:],'o-', label = 'Original trajectory')

plt.plot(dataset_noise_D[1,2:],'o-', label = r'Trajectory with changed $D$')

plt.xlabel('Time'); plt.ylabel('Position')

plt.legend();

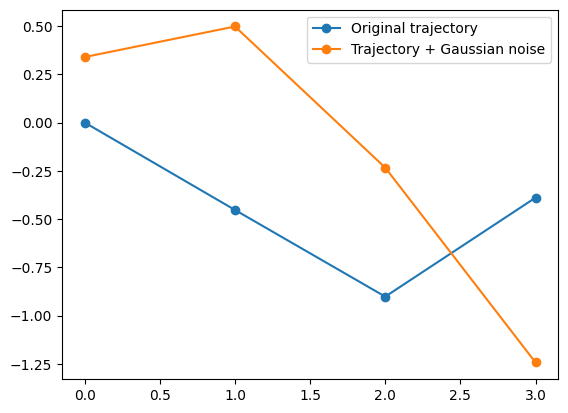

Localization noise The class also allows to create noisy trajectories via create_noisy_localization_dataset. This functions works as create_dataset but has three extra optional input parameters: noise_func, sigma and mu. If noise_func is False, the function generates a dataset with Gaussian noise with variance sigma and mean mu. Keep in mind that the function will never save noisy trajectories. The saved/loaded trajectories will never have noise, which will be added a posteriori. Here is an example with Gaussian noise:

# The default value of noise_func is False, so we don't need to input it.

# Default value of sigma is 1 and mu is 0.

dataset_noisy = AD.create_noisy_localization_dataset(dataset.copy(), T = 4)

print(np.round(dataset_noisy,2))[[ 0. 0.7 1.22 2.04 0.48 -0.89]

[ 0. 0.7 0.59 1.37 -0.36 -0.77]

[ 0. 0.9 -0.7 -1.18 0.87 0.94]

[ 0. 0.9 0.17 0.32 1.95 1.64]

[ 2. 0.7 -0.31 -1.24 0.17 0.76]

[ 2. 0.7 -1.35 1.16 2.18 -1.73]

[ 2. 0.9 2.14 0. 1.32 -0.69]

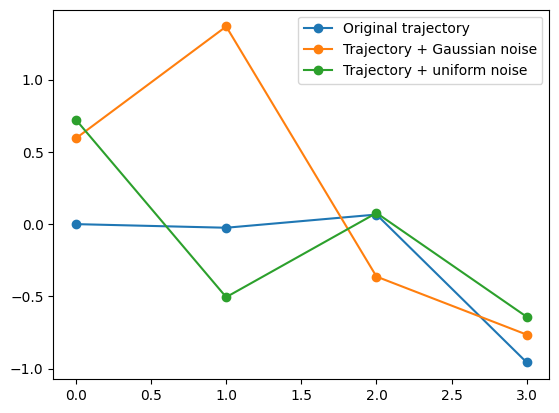

[ 2. 0.9 -0.64 0.39 -0.22 0.41]]plt.plot(dataset[1,2:],'o-', label = 'Original trajectory')

plt.plot(dataset_noisy[1,2:],'o-', label = 'Trajectory + Gaussian noise')

plt.legend();

You can also define your own noise function. The noise created by this functions will be added to each of the trajectories of the dataset. The input must be the trajectories and the output has to be of equal size of the trajectories. The output of this matrix will be added to the trajectories.

# Uniformly distributed noise

def uniform_noise(trajs):

N, M = trajs.shape

return np.random.rand(N, M)*(2*np.random.randint(2, size = (N, M))-1)

dataset_uniform = AD.create_noisy_localization_dataset(dataset.copy(), T = 4,

noise_func=uniform_noise)

print(np.round(dataset_uniform,2))[[ 0. 0.7 -0.37 0.53 1.29 0.97]

[ 0. 0.7 0.72 -0.51 0.08 -0.64]

[ 0. 0.9 -0.86 -0.91 -0.22 2.52]

[ 0. 0.9 -0.94 -0.38 0.92 3.15]

[ 2. 0.7 -0.76 0.49 0.61 -0.12]

[ 2. 0.7 0.91 0.42 0.62 -0.75]

[ 2. 0.9 0.77 -0.98 -0.51 0.32]

[ 2. 0.9 0.14 0.35 0.85 -0.69]]plt.plot(dataset[1,2:],'o-', label = 'Original trajectory')

plt.plot(dataset_noisy[1,2:],'o-', label = 'Trajectory + Gaussian noise')

plt.plot(dataset_uniform[1,2:],'o-', label = 'Trajectory + uniform noise')

plt.legend();

Creating segmented datasets

The class datasets_theory also allows to create trajectories which are made of two different diffusion models or anomalous exponent, at a given changing point. Such function has the name create_segmented_dataset. Following the ANDI challenge guidelines, the output trajectories will have length 200. However, you can choose an arbitrary length with the variable final_length. The input datasets must have at least the same size as final_length. There is also the option to randomly shuffle the input datasets from which the segmented output dataset will be generate, throught the variable random_shuffle.

# First we define two datasets. Let's take same anomalous exponent but various models

dataset1 = AD.create_dataset(T = 200, N_models = 2, exponents = [0.7], models = [0, 1, 2, 4])

dataset2 = AD.create_dataset(T = 200, N_models = 2, exponents = [0.7], models = [0, 1, 2, 4])

# Now we give them to the desired function

seg = AD.create_segmented_dataset(dataset1, dataset2)The first 5 elements of every row of the variable seg label the trajectory. First point is the changing time, second and third, and fourth and fifthe are the labels of the model and exponent of the input trajectories, respectevely.

seg[:, :5]array([[145. , 0. , 0.7, 0. , 0.7],

[ 91. , 0. , 0.7, 0. , 0.7],

[ 16. , 1. , 0.7, 1. , 0.7],

[ 88. , 1. , 0.7, 1. , 0.7],

[100. , 2. , 0.7, 2. , 0.7],

[ 82. , 2. , 0.7, 2. , 0.7],

[175. , 4. , 0.7, 4. , 0.7],

[189. , 4. , 0.7, 4. , 0.7]])As we did not shuffle data and dataset1 and dataset2 have the trajectories ordered by model, there are no mixes between different models. This can be done thanks to the random_shuffle variable:

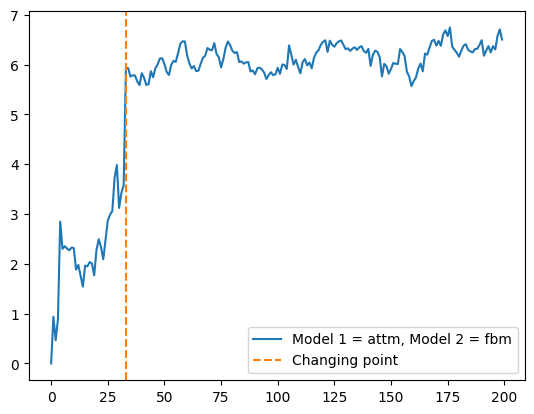

seg = AD.create_segmented_dataset(dataset1, dataset2, random_shuffle = True)

seg[:, :5]array([[ 33. , 0. , 0.7, 2. , 0.7],

[ 86. , 2. , 0.7, 1. , 0.7],

[ 74. , 0. , 0.7, 0. , 0.7],

[132. , 1. , 0.7, 2. , 0.7],

[140. , 1. , 0.7, 4. , 0.7],

[ 75. , 4. , 0.7, 0. , 0.7],

[ 12. , 2. , 0.7, 4. , 0.7],

[163. , 4. , 0.7, 1. , 0.7]])plt.plot(seg[0,5:], label = 'Model 1 = '+AD.avail_models_name[int(seg[0, 1])]+', Model 2 = '+AD.avail_models_name[int(seg[0, 3])])

plt.axvline(seg[0,0], c = 'C1', ls = '--', label = 'Changing point')

plt.legend();

Accesing the diffusion models from models_theory

All the theoretical diffusion models available in andi are collected in the class models_theory(). We can access them with a simple import :

from andi_datasets.models_theory import models_theory

# We will also need the following libraries

import inspectThere exist three subclasses, one for each dimensions. To see the available models for each dimension, you can use the following:

for dimensions in range(3):

if dimensions == 0:

models = models_theory()._oneD()

elif dimensions == 1:

models = models_theory()._twoD()

elif dimensions == 2:

models = models_theory()._threeD()

available_models = inspect.getmembers(models, inspect.ismethod)

print('\nThe availailabe models for dimension '+str(dimensions+1)+' are:')

[print('- '+x[0]) for x in available_models]

The availailabe models for dimension 1 are:

- attm

- ctrw

- fbm

- lw

- sbm

The availailabe models for dimension 2 are:

- attm

- ctrw

- fbm

- lw

- sbm

The availailabe models for dimension 3 are:

- attm

- ctrw

- fbm

- lw

- sbmOne dimensional trajectories

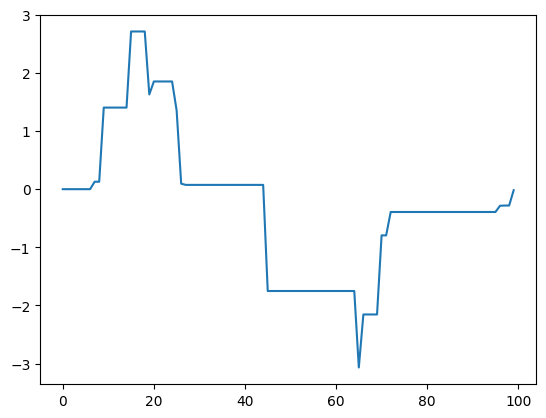

For this example, we will create a trajectory of a 1D continuous time random walk (ctrw). All the model functions must have as inputs the length of the trajectories and the anomalous exponent \(\alpha\):

oneD = models_theory()._oneD()

traj = oneD.ctrw(T = 100, alpha = 0.8)

plt.plot(traj)

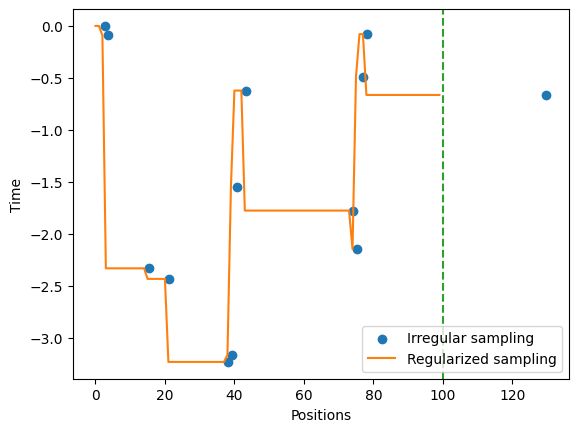

Some diffusion models are constructed from a collection of positions at different sampling times. This means that their output array does not have \(T\) points, but rather number \(m\) of sampled posisition. In order to acces such kind of information, some model generators have the optional parameter regulare_time, which if False, makes the function to return an array with two rows and \(m\) columns. The first row are the sampling times and the second are the positions. CTRW is an example of these models:

traj_nonR = oneD.ctrw(T = 100, alpha = 0.8, regular_time = False)

traj_nonR.shape(2, 13)If regulare_time = True (default value), the trajectory is fed to the function regularize from utils_trajectories, which transforms the trajectory to regular sampling times. Below is an example with the previously defined trajectory. The offset is due to the need of rounding the times coming from the variable traj_nonR[1].

from andi_datasets.utils_trajectories import regularize

traj_R = regularize(positions = traj_nonR[1,:], times = traj_nonR[0, :], T = 100)

fig, ax = plt.subplots()

ax.scatter(traj_nonR[0,:], traj_nonR[1, :], c = 'C0', label = 'Irregular sampling')

ax.plot(traj_R, c = 'C1', label = 'Regularized sampling')

ax.set_xlabel('Positions'); ax.set_ylabel('Time'); ax.axvline(100,ls = '--', c = 'C2')

ax.legend();

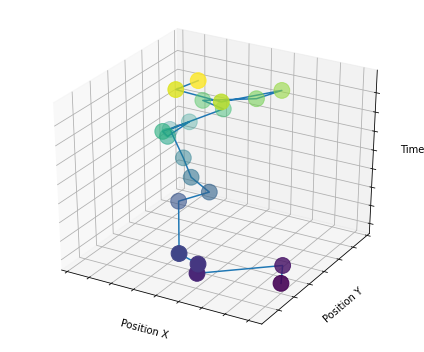

Two dimensional trajectories

We will proceed with an example of a 2D trajectory coming from the ATTM model. 2D trajectories are output as an array of length \(2\times T\).

T = 20

traj = models_theory()._twoD().attm(T = T, alpha = 0.8)

print(traj.shape)(40,)from mpl_toolkits.mplot3d import Axes3D

fig = plt.figure(figsize = (6,5))

ax = fig.gca(projection='3d')

ax.plot(traj[:T], traj[T:], np.arange(T))

scat = ax.scatter3D(traj[:T], traj[T:], np.arange(T), c=np.arange(T), s = 250);

ax.set_xlabel('Position X')

ax.set_ylabel('Position Y')

ax.set_zlabel('Time')

ax.xaxis.set_ticklabels([])

ax.yaxis.set_ticklabels([])

ax.zaxis.set_ticklabels([])

plt.tight_layout();

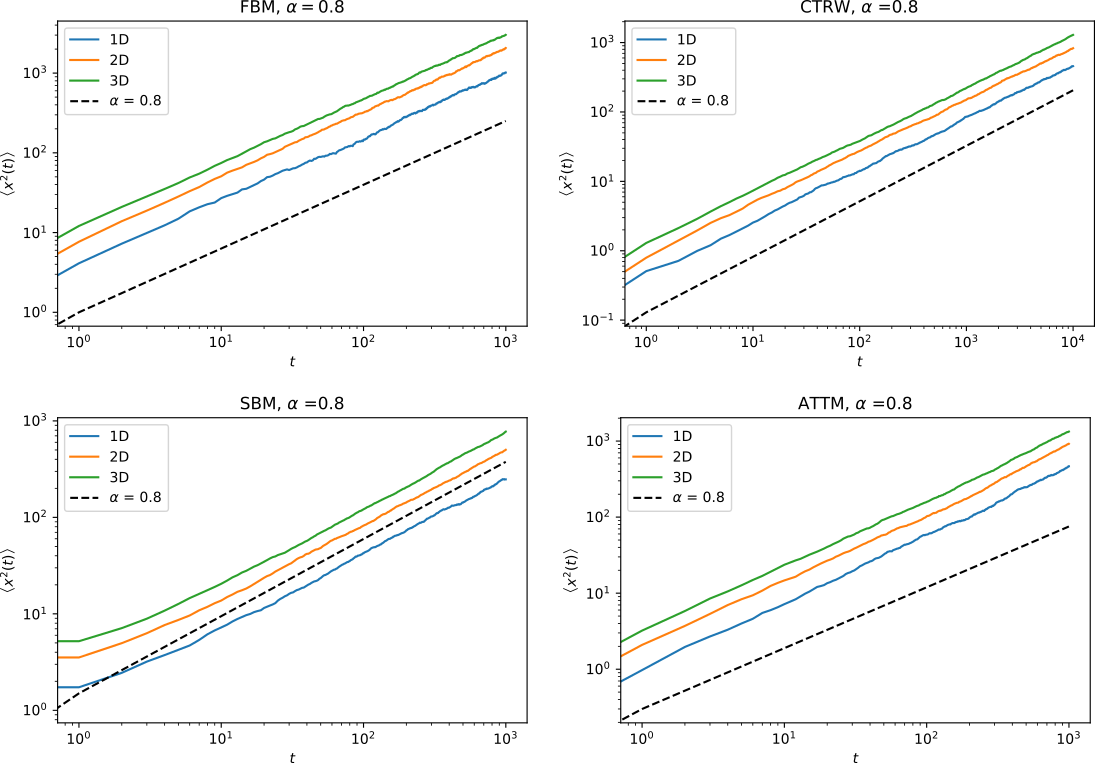

Ensemble averages of diffusion_models trajectories

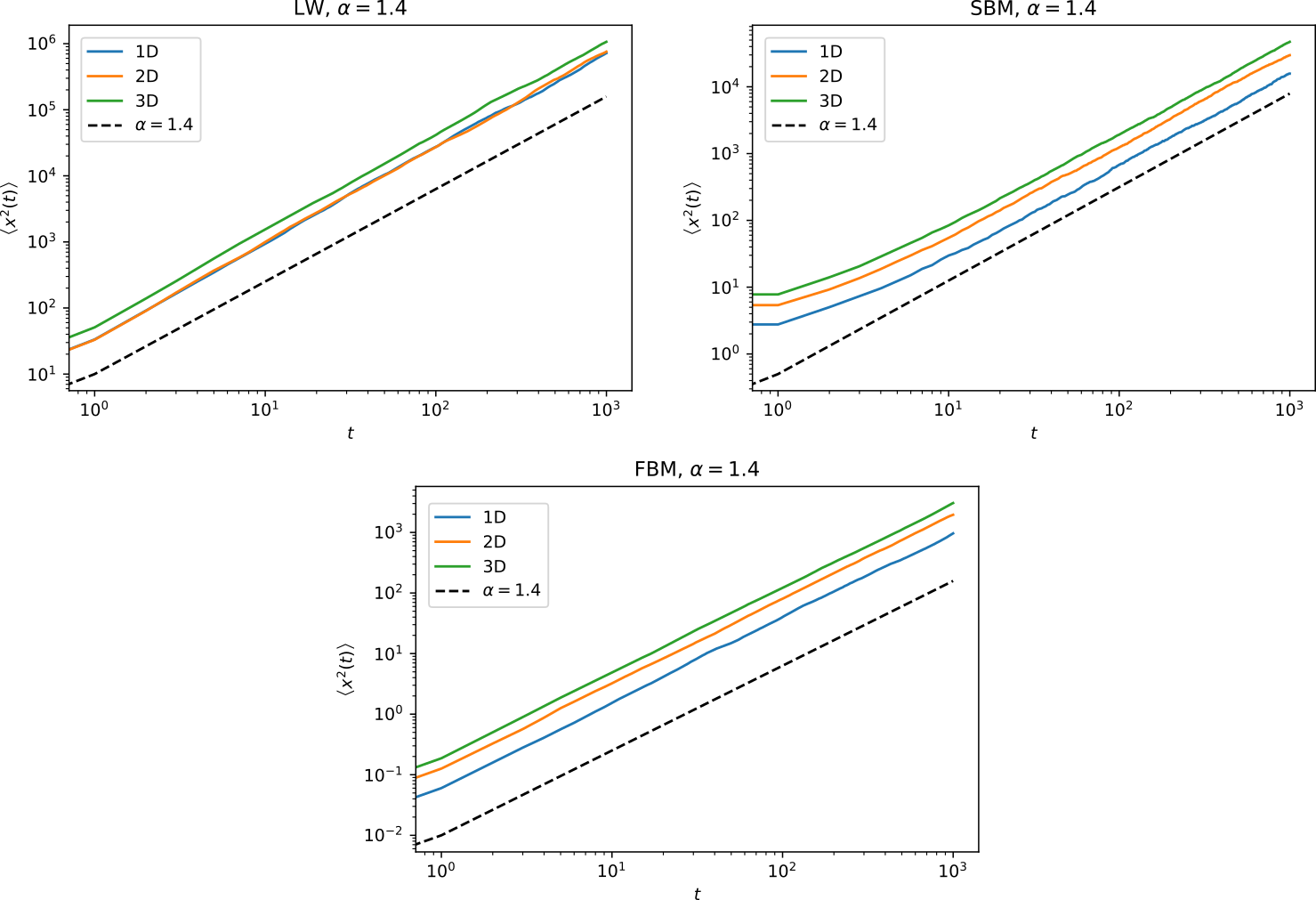

In the ANDI challenge, one of the parameters to be extracted is the anomalous exponent \(\alpha\). This exponents corresponds to the proportionality relation between the mean squared displacement averaged over \(N\) trajectories (eMSD) and time: \(\left< x^2(t) \right>\sim t^\alpha\). Here are a series of examples of eMSD calculated for various models:

(If your viewer does not allow you to see the following images, you can see them in the folder figures/)

Subdiffusive trajectories:

Superdiffusive trajectories: